In the last post, we installed SSD and tested that it works. Now we will train our own model with ssd_keras.

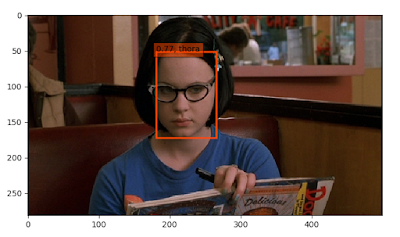

First, we need a dataset. I downloaded 120 pictures (.jpg) of Thora Birch from Ghost World.

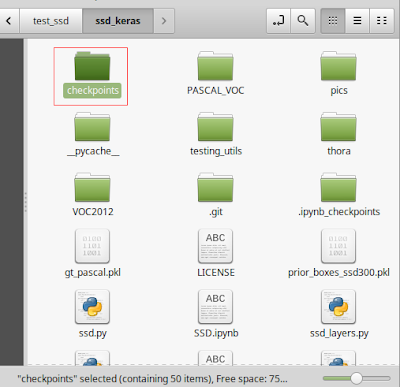

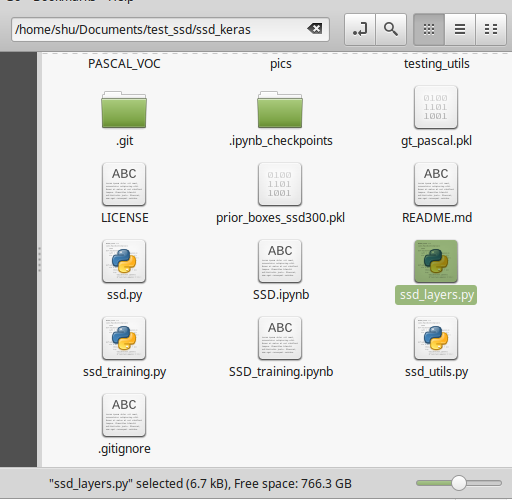

I made a folder like this:

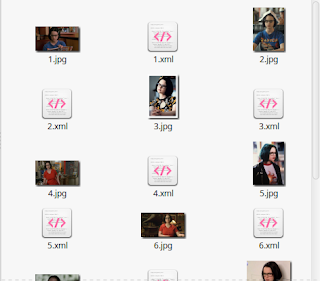

The pictures go into the thora folder.

From these pictures, we will make a dataset.

$ git clone https://github.com/tzutalin/labelImg.git

$ cd labelImg

$ python3.5 labelImg.py

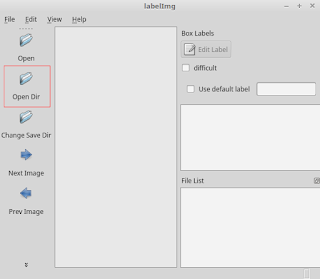

labelImg will open. Select "Open Dir".

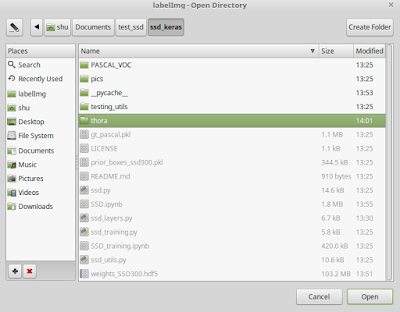

Choose the thora folder.

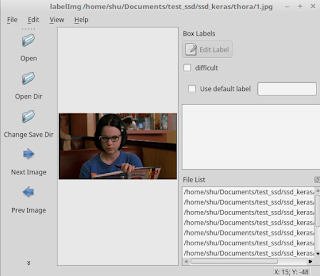

Opened.

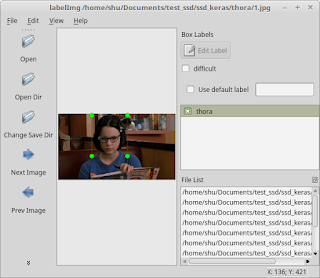

From the menu at the top of the window, choose "Edit" -> "Create Rectbox" and draw a rectangle around her face. Set the label (category name) to "thora", then save. labelImg writes each annotation as a Pascal VOC .xml file.

Do the same for the other 119 pictures.

Finished.

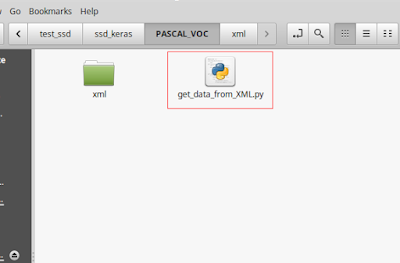

Move all the .xml files we created into the xml folder.
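Before converting the annotations, it can help to check that every file in the xml folder really is an annotation with a "thora" box, since a typo in a label would otherwise only show up as an "unknown label" message later. This is a minimal sketch using only the standard library; `check_annotations` is a hypothetical helper name, and it assumes the Pascal VOC layout (`<object>` elements with a `<name>` child) that labelImg saves.

```python
import os
from xml.etree import ElementTree


def check_annotations(xml_dir, expected_label="thora"):
    """Return a list of (filename, problem) pairs for suspicious files."""
    problems = []
    for filename in sorted(os.listdir(xml_dir)):
        # Anything that is not an .xml file would break the parser later.
        if not filename.endswith(".xml"):
            problems.append((filename, "not an .xml file"))
            continue
        root = ElementTree.parse(os.path.join(xml_dir, filename)).getroot()
        objects = root.findall("object")
        if not objects:
            problems.append((filename, "no <object> boxes"))
        # Every box should carry the label we typed in labelImg.
        for obj in objects:
            name = obj.find("name").text
            if name != expected_label:
                problems.append((filename, "unexpected label: %s" % name))
    return problems
```

For example, `check_annotations('./xml/')` should return an empty list when all 120 files are fine.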

We need to change get_data_from_XML.py a bit. Use this code:

```python
import os
import pickle
from xml.etree import ElementTree

import numpy as np


class XML_preprocessor(object):

    def __init__(self, data_path):
        self.path_prefix = data_path
        self.num_classes = 1  # changed: number of classes (thora only)
        self.data = dict()
        self._preprocess_XML()

    def _preprocess_XML(self):
        filenames = os.listdir(self.path_prefix)
        for filename in filenames:
            # Skip anything that is not an annotation file.
            if not filename.endswith('.xml'):
                continue
            tree = ElementTree.parse(os.path.join(self.path_prefix, filename))
            root = tree.getroot()
            bounding_boxes = []
            one_hot_classes = []
            size_tree = root.find('size')
            width = float(size_tree.find('width').text)
            height = float(size_tree.find('height').text)
            for object_tree in root.findall('object'):
                for bounding_box in object_tree.iter('bndbox'):
                    # Normalize pixel coordinates to [0, 1].
                    xmin = float(bounding_box.find('xmin').text) / width
                    ymin = float(bounding_box.find('ymin').text) / height
                    xmax = float(bounding_box.find('xmax').text) / width
                    ymax = float(bounding_box.find('ymax').text) / height
                    bounding_box = [xmin, ymin, xmax, ymax]
                    bounding_boxes.append(bounding_box)
                class_name = object_tree.find('name').text
                one_hot_class = self._to_one_hot(class_name)
                one_hot_classes.append(one_hot_class)
            image_name = root.find('filename').text
            bounding_boxes = np.asarray(bounding_boxes)
            one_hot_classes = np.asarray(one_hot_classes)
            # Each row: [xmin, ymin, xmax, ymax, one-hot class]
            image_data = np.hstack((bounding_boxes, one_hot_classes))
            self.data[image_name] = image_data

    # changed: only one category
    def _to_one_hot(self, name):
        one_hot_vector = [0] * self.num_classes
        if name == 'thora':
            one_hot_vector[0] = 1
        else:
            print('unknown label: %s' % name)
        return one_hot_vector


# example of how to use it
data = XML_preprocessor('./xml/').data
with open('thora.pkl', 'wb') as f:
    pickle.dump(data, f)
```

It isn't a big change: I only changed the number of classes to 1 (thora), made _to_one_hot recognize only the "thora" label, and made the script write thora.pkl. Delete the original code in get_data_from_XML.py and paste this code into the file.

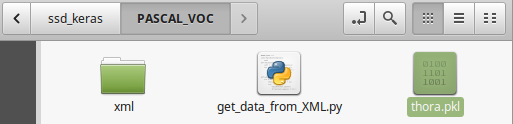

And run it:

$ python3.5 get_data_from_XML.py

You will get thora.pkl.
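To make sure the conversion worked, you can load the pickle and check its layout. This is a sketch under the assumption that each value has the row format the script above produces, `[xmin, ymin, xmax, ymax, one-hot...]` with coordinates divided by the image size; `summarize_gt` is a hypothetical helper name.

```python
import pickle

import numpy as np


def summarize_gt(pkl_path, num_classes=1):
    """Load a ground-truth pickle and check its layout.

    Each value should be a 2-D array whose rows are
    [xmin, ymin, xmax, ymax, one-hot label...], with coordinates
    divided by the image width/height (so they lie in [0, 1]).
    Returns (number of images, total number of boxes).
    """
    with open(pkl_path, "rb") as f:
        gt = pickle.load(f)
    num_boxes = 0
    for image_name, boxes in gt.items():
        boxes = np.asarray(boxes)
        # An image without boxes ends up as a 1-D empty array and is reported here.
        if boxes.ndim != 2 or boxes.shape[1] != 4 + num_classes:
            raise ValueError("%s: unexpected shape %s" % (image_name, boxes.shape))
        coords = boxes[:, :4]
        if np.any(coords < 0) or np.any(coords > 1):
            raise ValueError("%s: coordinates outside [0, 1]" % image_name)
        if np.any(coords[:, 2] <= coords[:, 0]) or np.any(coords[:, 3] <= coords[:, 1]):
            raise ValueError("%s: xmax/ymax must exceed xmin/ymin" % image_name)
        num_boxes += len(boxes)
    return len(gt), num_boxes
```

If each of the 120 pictures has exactly one box, `summarize_gt('thora.pkl')` should return `(120, 120)`.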

Move it into the ssd_keras folder.

The .pkl file and the dataset are now ready, but we still need to configure the training code to use them.

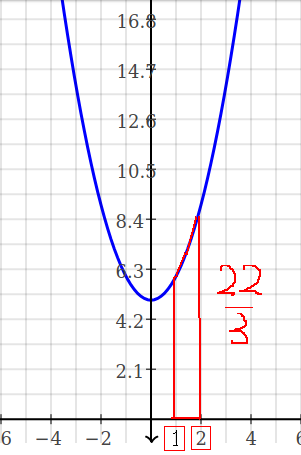

In a terminal, go to the ssd_keras directory and start Jupyter Notebook:

$ cd your/directory/to/ssd_keras

$ jupyter notebook


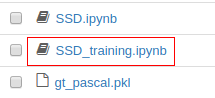

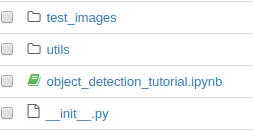

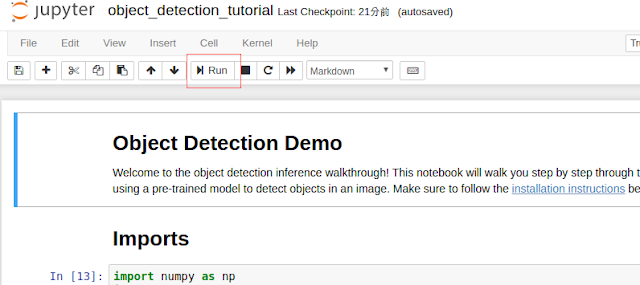

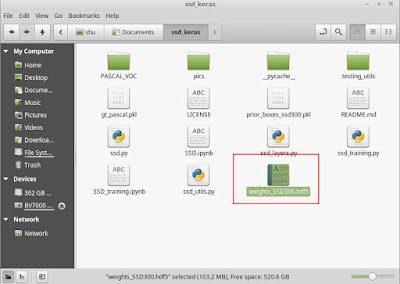

And you will see this:

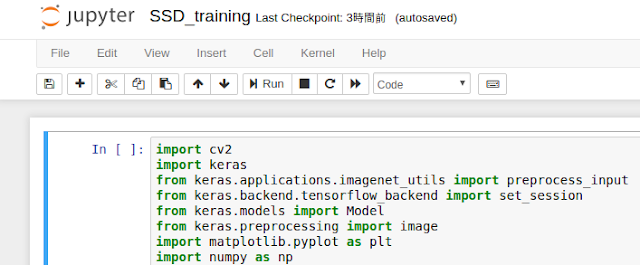

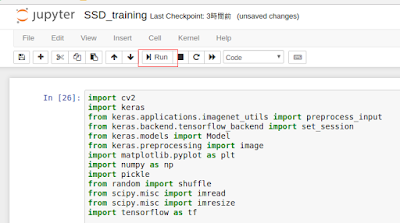

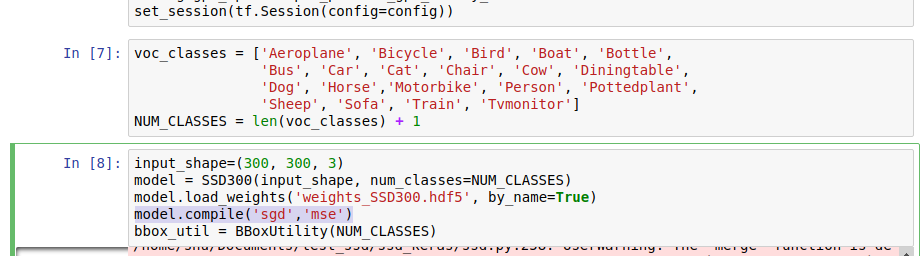

Open SSD_training.ipynb.

You will see this:

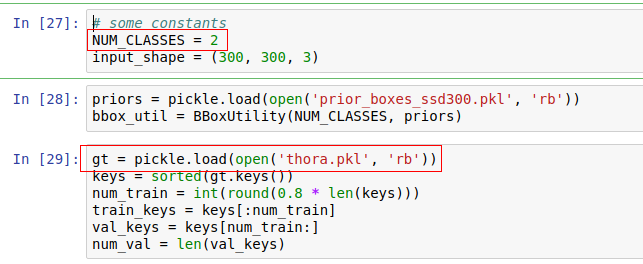

Change the number of classes (NUM_CLASSES) to 2 and the .pkl file to load to "thora.pkl".

That gives 2 classes: the thora class and the background ("others") class. The background class is always needed.
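This is why NUM_CLASSES is 2 here while get_data_from_XML.py uses num_classes = 1: the .pkl stores one-hot vectors for the annotated labels only, and the model adds the background class on top. My reading of ssd_keras's `BBoxUtility.assign_boxes` is that it fills in the background column itself, so it is not stored in the .pkl. A small sketch of that bookkeeping, with `LABELS` and `gt_row` as hypothetical names:

```python
import numpy as np

# Labels annotated in labelImg (background is NOT listed here).
LABELS = ["thora"]

# The model additionally predicts a background class, hence the +1.
NUM_CLASSES = len(LABELS) + 1


def gt_row(box, label, labels=LABELS):
    """Build one ground-truth row [xmin, ymin, xmax, ymax, one-hot...].

    The one-hot part covers only the annotated labels (no background),
    like the rows written by the modified get_data_from_XML.py.
    Raises ValueError for a label that is not in `labels`.
    """
    one_hot = [0.0] * len(labels)
    one_hot[labels.index(label)] = 1.0
    return np.array(list(box) + one_hot)


row = gt_row([0.1, 0.2, 0.5, 0.8], "thora")
print(NUM_CLASSES, row.shape)  # 2 (5,)
```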

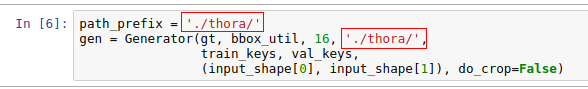

Also change path_prefix and the path passed to Generator so that they point to the thora folder. Note that the number passed as the third argument to Generator (16 in the original notebook; I used 1 in my code below) is the batch size, i.e. how many pictures are loaded at once for each training step. If your dataset doesn't have enough pictures, decrease it. Larger batches tend to make training more stable, but they need more GPU memory.

Before running the program, create a "checkpoints" folder inside ssd_keras. The learned weights will be saved there after every epoch.
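Here is a quick way to check the numbers for this dataset. It is a sketch that mirrors the notebook's 90/10 split and the Generator's batching; `split_and_batch` is a hypothetical helper. If I read `Generator.generate` correctly, it only yields once it has collected `batch_size` samples and starts over on every pass, so with 120 pictures and a batch size of 16 the 12 validation pictures never fill a batch and the validation generator would never yield anything.

```python
def split_and_batch(num_images, batch_size, train_fraction=0.9):
    """Mirror the notebook's train/val split and the Generator's batching.

    Returns (num_train, num_val, full_train_batches, full_val_batches).
    Samples left over after the last full batch of a pass are skipped.
    """
    num_train = int(round(train_fraction * num_images))
    num_val = num_images - num_train
    return num_train, num_val, num_train // batch_size, num_val // batch_size


for bs in (1, 16):
    print(bs, split_and_batch(120, bs))
# 1 (108, 12, 108, 12)
# 16 (108, 12, 6, 0)
```

A zero in the last position is a sign that the batch size is larger than the validation set.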
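The folder can also be created from Python. This sketch assumes it runs from the ssd_keras directory (the path is relative) and reuses the filename pattern passed to ModelCheckpoint in the code below, to show what the saved weight files are called.

```python
import os

CHECKPOINT_DIR = "checkpoints"
# Same pattern as the ModelCheckpoint callback in the training code.
CHECKPOINT_PATTERN = "./checkpoints/weights.{epoch:02d}-{val_loss:.2f}.hdf5"

# Create the folder if it does not exist yet (no error if it already does).
os.makedirs(CHECKPOINT_DIR, exist_ok=True)

# Keras fills in the epoch number and the logged val_loss, for example:
print(CHECKPOINT_PATTERN.format(epoch=3, val_loss=1.2345))
# ./checkpoints/weights.03-1.23.hdf5
```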

Run the program.

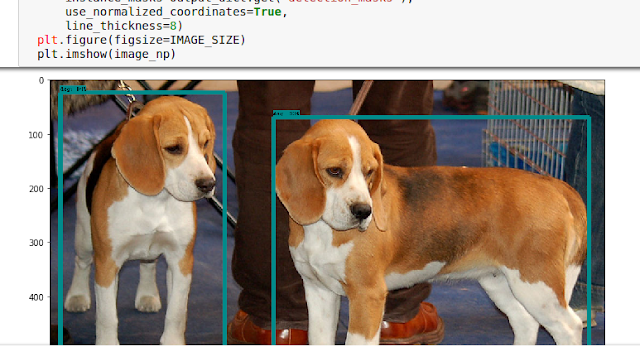

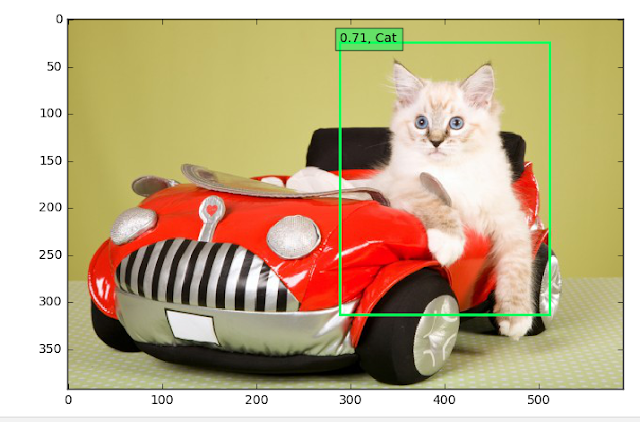

The result:

You can convert the .ipynb file to a .py file like this:

$ jupyter nbconvert --to python SSD_training.ipynb

My converted code, for your reference (.py):

```python
# coding: utf-8

# In[24]:

import cv2
import keras
from keras.applications.imagenet_utils import preprocess_input
from keras.backend.tensorflow_backend import set_session
from keras.models import Model
from keras.preprocessing import image
import matplotlib.pyplot as plt
import numpy as np
import pickle
from random import shuffle
from scipy.misc import imread
from scipy.misc import imresize
import tensorflow as tf

from ssd import SSD300
from ssd_training import MultiboxLoss
from ssd_utils import BBoxUtility

#get_ipython().run_line_magic('matplotlib', 'inline')
plt.rcParams['figure.figsize'] = (8, 8)
plt.rcParams['image.interpolation'] = 'nearest'
np.set_printoptions(suppress=True)

# config = tf.ConfigProto()
# config.gpu_options.per_process_gpu_memory_fraction = 0.9
# set_session(tf.Session(config=config))

# In[25]:

# some constants
NUM_CLASSES = 2  # thora + background
input_shape = (300, 300, 3)

# In[26]:

priors = pickle.load(open('prior_boxes_ssd300.pkl', 'rb'))
bbox_util = BBoxUtility(NUM_CLASSES, priors)

# In[27]:

# Load the ground truth and split it 90% / 10% into training and validation keys.
gt = pickle.load(open('thora.pkl', 'rb'))
keys = sorted(gt.keys())
num_train = int(round(0.9 * len(keys)))
train_keys = keys[:num_train]
val_keys = keys[num_train:]
num_val = len(val_keys)

# In[28]:

class Generator(object):
    def __init__(self, gt, bbox_util,
                 batch_size, path_prefix,
                 train_keys, val_keys, image_size,
                 saturation_var=0.5,
                 brightness_var=0.5,
                 contrast_var=0.5,
                 lighting_std=0.5,
                 hflip_prob=0.5,
                 vflip_prob=0.5,
                 do_crop=True,
                 crop_area_range=[0.75, 1.0],
                 aspect_ratio_range=[3./4., 4./3.]):
        self.gt = gt
        self.bbox_util = bbox_util
        self.batch_size = batch_size
        self.path_prefix = path_prefix
        self.train_keys = train_keys
        self.val_keys = val_keys
        self.train_batches = len(train_keys)
        self.val_batches = len(val_keys)
        self.image_size = image_size
        self.color_jitter = []
        if saturation_var:
            self.saturation_var = saturation_var
            self.color_jitter.append(self.saturation)
        if brightness_var:
            self.brightness_var = brightness_var
            self.color_jitter.append(self.brightness)
        if contrast_var:
            self.contrast_var = contrast_var
            self.color_jitter.append(self.contrast)
        self.lighting_std = lighting_std
        self.hflip_prob = hflip_prob
        self.vflip_prob = vflip_prob
        self.do_crop = do_crop
        self.crop_area_range = crop_area_range
        self.aspect_ratio_range = aspect_ratio_range

    def grayscale(self, rgb):
        return rgb.dot([0.299, 0.587, 0.114])

    def saturation(self, rgb):
        gs = self.grayscale(rgb)
        alpha = 2 * np.random.random() * self.saturation_var
        alpha += 1 - self.saturation_var
        rgb = rgb * alpha + (1 - alpha) * gs[:, :, None]
        return np.clip(rgb, 0, 255)

    def brightness(self, rgb):
        alpha = 2 * np.random.random() * self.brightness_var
        alpha += 1 - self.brightness_var  # fixed: was self.saturation_var
        rgb = rgb * alpha
        return np.clip(rgb, 0, 255)

    def contrast(self, rgb):
        gs = self.grayscale(rgb).mean() * np.ones_like(rgb)
        alpha = 2 * np.random.random() * self.contrast_var
        alpha += 1 - self.contrast_var
        rgb = rgb * alpha + (1 - alpha) * gs
        return np.clip(rgb, 0, 255)

    def lighting(self, img):
        cov = np.cov(img.reshape(-1, 3) / 255.0, rowvar=False)
        eigval, eigvec = np.linalg.eigh(cov)
        noise = np.random.randn(3) * self.lighting_std
        noise = eigvec.dot(eigval * noise) * 255
        img += noise
        return np.clip(img, 0, 255)

    def horizontal_flip(self, img, y):
        if np.random.random() < self.hflip_prob:
            img = img[:, ::-1]
            y[:, [0, 2]] = 1 - y[:, [2, 0]]
        return img, y

    def vertical_flip(self, img, y):
        if np.random.random() < self.vflip_prob:
            img = img[::-1]
            y[:, [1, 3]] = 1 - y[:, [3, 1]]
        return img, y

    def random_sized_crop(self, img, targets):
        img_w = img.shape[1]
        img_h = img.shape[0]
        img_area = img_w * img_h
        random_scale = np.random.random()
        random_scale *= (self.crop_area_range[1] -
                         self.crop_area_range[0])
        random_scale += self.crop_area_range[0]
        target_area = random_scale * img_area
        random_ratio = np.random.random()
        random_ratio *= (self.aspect_ratio_range[1] -
                         self.aspect_ratio_range[0])
        random_ratio += self.aspect_ratio_range[0]
        w = np.round(np.sqrt(target_area * random_ratio))
        h = np.round(np.sqrt(target_area / random_ratio))
        if np.random.random() < 0.5:
            w, h = h, w
        w = min(w, img_w)
        w_rel = w / img_w
        w = int(w)
        h = min(h, img_h)
        h_rel = h / img_h
        h = int(h)
        x = np.random.random() * (img_w - w)
        x_rel = x / img_w
        x = int(x)
        y = np.random.random() * (img_h - h)
        y_rel = y / img_h
        y = int(y)
        img = img[y:y+h, x:x+w]
        new_targets = []
        for box in targets:
            cx = 0.5 * (box[0] + box[2])
            cy = 0.5 * (box[1] + box[3])
            # Keep only boxes whose center lies inside the crop.
            if (x_rel < cx < x_rel + w_rel and
                    y_rel < cy < y_rel + h_rel):
                xmin = (box[0] - x_rel) / w_rel
                ymin = (box[1] - y_rel) / h_rel
                xmax = (box[2] - x_rel) / w_rel
                ymax = (box[3] - y_rel) / h_rel
                xmin = max(0, xmin)
                ymin = max(0, ymin)
                xmax = min(1, xmax)
                ymax = min(1, ymax)
                box[:4] = [xmin, ymin, xmax, ymax]
                new_targets.append(box)
        new_targets = np.asarray(new_targets).reshape(-1, targets.shape[1])
        return img, new_targets

    def generate(self, train=True):
        while True:
            if train:
                shuffle(self.train_keys)
                keys = self.train_keys
            else:
                shuffle(self.val_keys)
                keys = self.val_keys
            inputs = []
            targets = []
            for key in keys:
                img_path = self.path_prefix + key
                img = imread(img_path).astype('float32')
                y = self.gt[key].copy()
                if train and self.do_crop:
                    img, y = self.random_sized_crop(img, y)
                img = imresize(img, self.image_size).astype('float32')
                if train:
                    # Data augmentation (training only).
                    shuffle(self.color_jitter)
                    for jitter in self.color_jitter:
                        img = jitter(img)
                    if self.lighting_std:
                        img = self.lighting(img)
                    if self.hflip_prob > 0:
                        img, y = self.horizontal_flip(img, y)
                    if self.vflip_prob > 0:
                        img, y = self.vertical_flip(img, y)
                y = self.bbox_util.assign_boxes(y)
                inputs.append(img)
                targets.append(y)
                if len(targets) == self.batch_size:
                    tmp_inp = np.array(inputs)
                    tmp_targets = np.array(targets)
                    inputs = []
                    targets = []
                    yield preprocess_input(tmp_inp), tmp_targets

# In[29]:

path_prefix = 'thora/'
# The third argument is the batch size (1 here).
gen = Generator(gt, bbox_util, 1, path_prefix,
                train_keys, val_keys,
                (input_shape[0], input_shape[1]), do_crop=False)

# In[30]:

model = SSD300(input_shape, num_classes=NUM_CLASSES)
#model.load_weights('weights_SSD300.hdf5', by_name=True)

# In[31]:

# Freeze the early convolution blocks (listed by layer name) so they are not trained.
freeze = ['input_1', 'conv1_1', 'conv1_2', 'pool1',
          'conv2_1', 'conv2_2', 'pool2',
          'conv3_1', 'conv3_2', 'conv3_3', 'pool3']#,
#           'conv4_1', 'conv4_2', 'conv4_3', 'pool4']

for L in model.layers:
    if L.name in freeze:
        L.trainable = False

# In[32]:

# The learning rate is multiplied by 0.9 every epoch.
def schedule(epoch, decay=0.9):
    return base_lr * decay**(epoch)

callbacks = [keras.callbacks.ModelCheckpoint('./checkpoints/weights.{epoch:02d}-{val_loss:.2f}.hdf5',
                                             verbose=1,
                                             save_weights_only=True),
             keras.callbacks.LearningRateScheduler(schedule)]

# In[33]:

base_lr = 3e-4
optim = keras.optimizers.Adam(lr=base_lr)
# optim = keras.optimizers.RMSprop(lr=base_lr)
# optim = keras.optimizers.SGD(lr=base_lr, momentum=0.9, decay=decay, nesterov=True)
model.compile(optimizer=optim,
              loss=MultiboxLoss(NUM_CLASSES, neg_pos_ratio=2.0).compute_loss)

# In[34]:

# Keras 1.x-style arguments (nb_epoch, nb_val_samples, nb_worker).
nb_epoch = 10
history = model.fit_generator(gen.generate(True), gen.train_batches,
                              nb_epoch, verbose=1,
                              callbacks=callbacks,
                              validation_data=gen.generate(False),
                              nb_val_samples=gen.val_batches,
                              nb_worker=1)

# In[ ]:

inputs = []
images = []
img_path = path_prefix + sorted(val_keys)[0]
img = image.load_img(img_path, target_size=(300, 300))
img = image.img_to_array(img)
images.append(imread(img_path))
inputs.append(img.copy())
inputs = preprocess_input(np.array(inputs))

# In[35]:

preds = model.predict(inputs, batch_size=1, verbose=1)
results = bbox_util.detection_out(preds)

# In[ ]:

for i, img in enumerate(images):
    # Parse the outputs.
    det_label = results[i][:, 0]
    det_conf = results[i][:, 1]
    det_xmin = results[i][:, 2]
    det_ymin = results[i][:, 3]
    det_xmax = results[i][:, 4]
    det_ymax = results[i][:, 5]

    # Get detections with confidence of at least 0.6.
    top_indices = [j for j, conf in enumerate(det_conf) if conf >= 0.6]
    top_conf = det_conf[top_indices]
    top_label_indices = det_label[top_indices].tolist()
    top_xmin = det_xmin[top_indices]
    top_ymin = det_ymin[top_indices]
    top_xmax = det_xmax[top_indices]
    top_ymax = det_ymax[top_indices]

    colors = plt.cm.hsv(np.linspace(0, 1, 4)).tolist()
    plt.imshow(img / 255.)
    currentAxis = plt.gca()

    for j in range(top_conf.shape[0]):
        # Scale normalized coordinates back to pixels of the original image.
        xmin = int(round(top_xmin[j] * img.shape[1]))
        ymin = int(round(top_ymin[j] * img.shape[0]))
        xmax = int(round(top_xmax[j] * img.shape[1]))
        ymax = int(round(top_ymax[j] * img.shape[0]))
        score = top_conf[j]
        label = int(top_label_indices[j])
        # label_name = voc_classes[label - 1]
        display_txt = '{:0.2f}, {}'.format(score, label)
        coords = (xmin, ymin), xmax-xmin+1, ymax-ymin+1
        color = colors[label]
        currentAxis.add_patch(plt.Rectangle(*coords, fill=False, edgecolor=color, linewidth=2))
        currentAxis.text(xmin, ymin, display_txt, bbox={'facecolor': color, 'alpha': 0.5})

    plt.show(block=True)
```
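The parsing loop at the end of the script can be tried out without a trained model. Below is a minimal NumPy-only sketch of the same filtering and scaling; `to_pixel_boxes` is a hypothetical helper name, and the row layout `[label, confidence, xmin, ymin, xmax, ymax]` is taken from how the script indexes `results[i]`. Label 1 should correspond to thora, since 0 is reserved for the background.

```python
import numpy as np


def to_pixel_boxes(detections, img_w, img_h, threshold=0.6):
    """Filter one image's detections and convert them to pixel boxes.

    detections: rows of [label, confidence, xmin, ymin, xmax, ymax],
    with coordinates normalized to [0, 1].
    Returns a list of (label, confidence, xmin, ymin, xmax, ymax) tuples.
    """
    # reshape(-1, 6) also turns an empty detection list into a (0, 6) array.
    detections = np.asarray(detections, dtype=float).reshape(-1, 6)
    keep = detections[detections[:, 1] >= threshold]
    boxes = []
    for label, conf, xmin, ymin, xmax, ymax in keep:
        boxes.append((
            int(label),
            float(conf),
            int(round(xmin * img_w)),
            int(round(ymin * img_h)),
            int(round(xmax * img_w)),
            int(round(ymax * img_h)),
        ))
    return boxes


dets = [[1, 0.92, 0.25, 0.10, 0.75, 0.60],
        [1, 0.40, 0.00, 0.00, 0.20, 0.20]]
print(to_pixel_boxes(dets, img_w=400, img_h=300))
# [(1, 0.92, 100, 30, 300, 180)]
```

The second detection is dropped because its confidence is below the 0.6 threshold.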


Video

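If you want to try the trained model on a video, first download a video.

Then use "videotest_example.py" in the "testing_utils" folder, after filling in the necessary information in the file (the path to the video, the path to the learned weights, the class names in the model, and so on).

The model there has to be built with the same number of classes it was trained with (2 here: thora plus background). If your class list does not include the background class, you may need to add 1 to num_classes like this: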

model = SSD(input_shape, num_classes=NUM_CLASSES+1)
