Contents:

Preparation for coding:

1. Virtual box and Vagrant 2. Install Apache

3. Install MySQL

4. Install PHP

Edit crawler

1. Download and configure for PHPcrawl

2. Editing code of the crawler

3. Save fetched information in a database.

4. Sharing a local website inside a local network (optional)

5. User interface

What is webcrawler?

Webcrawler is a program that crawls on internet and gather information from internet. For example, google crawler is a very famous crawler that collects website information to use the information for Google search.we will modify and improve PHPcrawl to learn how to develop a program with php.

Preparation

Open /etc/php.ini

# vi /etc/php.ini

And change the value of output_buffering to "off":

output_buffering = off

Turning off output_buffering is not essential, but we will make it off for now (because, if it is on, we need to wait for a long time until the crawler finishes crawling and displays the full result. If it is off, the result is displayed one by one).

Glance at the code

If you see inside of PHPCrawl, you can see there are so many classes. You might be scared because you might think you need to understand how all of the classes will work. But you don't need to be scared by how lots of classes are there. When the crawler is working, only if they are needed, these classes are used. What we need to do is just to write how the crawler should work.

Many classes.

What we will do is not to design a new crawler, but just to modify an existing crawler and make it an original one. (Actually many creative products are made from open source projects.)

Edit the code

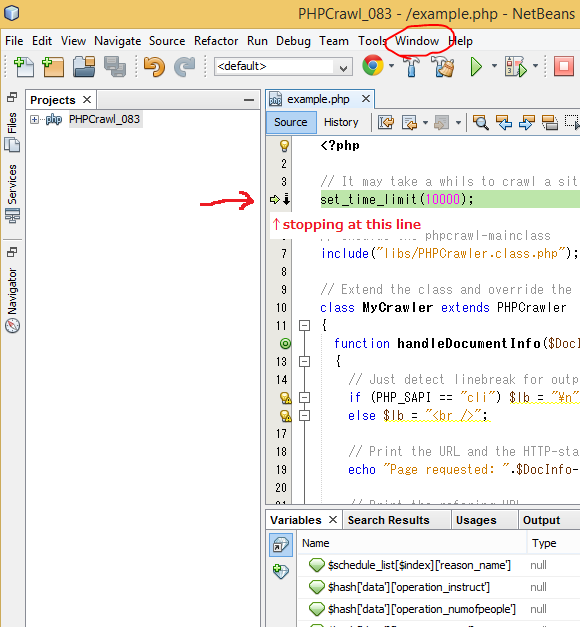

At first, double-click "example.php" on "Projects" panel. Then example.php will show up in the code editor.From this code editor, you can edit the example.php's code. Anyway, at first, we will see how it works. Click the debug button to start the crawler.

If you have checked "stop at first line", it would stop at the first line. But before stepping forward, maybe click "Window" from the menu.

Click "Debugging" => "Variables"

While the execution is being suspended at the first line, the browser keeps displaying "Loading" on the tab. The content is, of course, blank. Because no code to display html or words are executed yet.

html is a kind of programming language that is used to build a static web page. (Static webpages are ones like this blog, which just shows words on browser. Dynamic webpages are ones like Google. Google dynamically does many things like searching a certain word... Script languages like PHP are used to build dynamic webpages.)

Press F7 on the keyboard or click the down arrow to step forward. If you want to jump to the end of the execution, click the green play button. You can see how this program is executed and which class and method are used for the execution. For now, we don't need to check in detail, so just click the green button.

You will see the crawler will fetch data page by page every 5 seconds (if not, maybe output_buffering in php.ini isn't set to off):

You shouldn't decrease the seconds for the every execution because, if it is too fast, it can damage the web server. (It can be regarded as even an attack sometimes, so be careful.)

Maybe we need also megabyte for the result summary, not only byte of how much data is processed.

So we change the last part of the code of example.php like this:

echo "Summary:".$lb;

echo "Links followed: ".$report->links_followed.$lb;

echo "Documents received: ".$report->files_received.$lb;

$byteData = $report->bytes_received;

$megabyteData = $byteData/1024/1024;

echo "Bytes received: ".$byteData." bytes".$lb;

echo "Megabytes received: ".$megabyteData." megabytes".$lb;

echo "Process runtime: ".$report->process_runtime." sec".$lb;

echo "Links followed: ".$report->links_followed.$lb;

echo "Documents received: ".$report->files_received.$lb;

$byteData = $report->bytes_received;

$megabyteData = $byteData/1024/1024;

echo "Bytes received: ".$byteData." bytes".$lb;

echo "Megabytes received: ".$megabyteData." megabytes".$lb;

echo "Process runtime: ".$report->process_runtime." sec".$lb;

As you see, if it is a small change, it is quite easy to accomplish. :)

You want to make the crawler to fetch only PDF files? Such changes are even easier because fetching only PDF files is supported by default (original creator had prepared such method beforehand, so all we need to do is to call such method). But if you want changes that are not supported by default, it is where we need to develop by ourselves.